Preface

Large Data Centre Network Architecture

To meet the high-reliability demands of data centre services, modern data centre networks are commonly designed on the assumption that network devices and links are unreliable. The goal is that, when these devices or links fail, self-healing mechanisms keep the impact on business operations to a minimum. As a result, the Leaf-Spine (Leaf: leaf node, Spine: spine node) architecture has become the standard for data centres. This CLOS multi-stage switching design provides a large number of equivalent devices and equal-cost paths, which eliminates single points of failure. The architecture offers high reliability, high performance, and strong horizontal expansion (scale-out) capabilities.

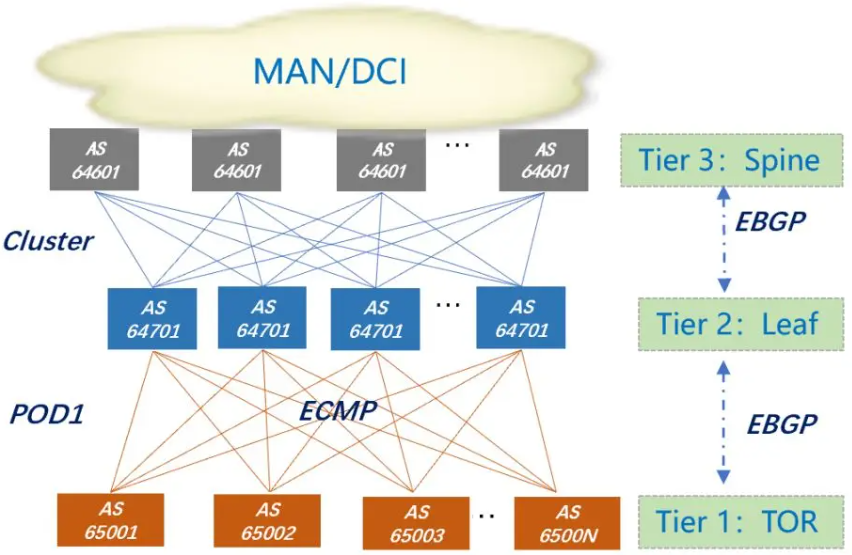

In this type of data centre architecture, BGP is often deployed across all layers of the CLOS network (including the TOR, Leaf, Spine, and other devices in Figure 1) to build a simple, unified, and very large-scale data centre network. A BGP deployment must meet the basic requirements for IPv4 and IPv6 route advertisement, and must also provide rapid convergence, flexible control, and convenient operation and maintenance.

BGP Deployment Design Considerations

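In a typical three-tier CLOS data centre network, the BGP design considerations fall roughly into two parts:

1. BGP basic capability planning includes:

● AS number planning.

● BGP basic parameter configuration.

● BGP ECMP.

● BGP route attribute planning and routing rules.

● BGP dual-stack planning.

2. BGP operation and maintenance capability planning includes:

● Using the Bidirectional Forwarding Detection (BFD) protocol to accelerate fault convergence.

● Ensuring uninterrupted service (fast switching, NSR, smooth exit and delayed release of BGP).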

BGP Basic Capability Planning

1. AS Number Planning

The original BGP-4 specification (RFC 1771) uses a 2-byte AS number, of which only 1,023 values (64512–65534) are reserved for private use. This is insufficient for the tens of thousands of network elements in a large IDC. There are currently two solutions to this problem:

● The newer specification "BGP Support for Four-octet AS Number Space" (RFC 4893, since updated by RFC 6793) defines a 4-byte AS number, making AS numbers as plentiful as IPv4 addresses (2^32). The range 4,200,000,000–4,294,967,294, roughly 95 million numbers, is reserved for private use. This is enough to assign an independent AS number to every network device, or even every host, in the IDC.

The following is an example of a recommended AS number assignment:

| Device Role | AS Planning Principle | Allocation Method | Allocation Example |
|---|---|---|---|
| TOR | Unique within a POD; can be reused in different PODs (TOR AS numbers are not propagated across PODs) | 65000 + TOR number | The first TOR is 65001, the second TOR is 65002 |
| Leaf | All Leafs in the same POD share the same AS number | 64700 + POD number | The first POD is 64701, the second POD is 64702 |
| Spine | Unique within the MAN | Reserved through global planning | For example, cluster/park XX: 64601 |
| MAN | Intranet only | Reserved through global planning | For example, XX MAN: 64513 |
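
The allocation rules in the table can be expressed as a short Python sketch, assuming TOR and POD numbers start at 1. The function and constant names are hypothetical helpers for illustration, not part of RGOS; the range checks are an added assumption that keeps every AS number inside the 2-byte private range and keeps Leaf AS numbers (64701 upward) clear of the TOR block (65001 upward).

```python
# Private-use AS ranges (RFC 6996); Python ranges exclude the stop value.
PRIVATE_2BYTE = range(64512, 65535)                  # 64512..65534
PRIVATE_4BYTE = range(4_200_000_000, 4_294_967_295)  # 4200000000..4294967294

TOR_BASE, LEAF_BASE = 65000, 64700  # bases taken from the allocation table


def is_private_asn(asn: int) -> bool:
    """True if asn lies in either private-use range."""
    return asn in PRIVATE_2BYTE or asn in PRIVATE_4BYTE


def tor_asn(tor_number: int) -> int:
    """TOR AS = 65000 + TOR number; unique within a POD, reusable across PODs."""
    if not 1 <= tor_number <= PRIVATE_2BYTE[-1] - TOR_BASE:
        raise ValueError(f"TOR number out of range: {tor_number}")
    return TOR_BASE + tor_number


def leaf_asn(pod_number: int) -> int:
    """Leaf AS = 64700 + POD number; shared by all Leafs in the POD."""
    # Assumption: stay below 65000 so Leaf AS numbers never collide with TOR AS numbers.
    if not 1 <= pod_number < TOR_BASE - LEAF_BASE:
        raise ValueError(f"POD number out of range: {pod_number}")
    return LEAF_BASE + pod_number


print(tor_asn(1), tor_asn(2))                  # 65001 65002
print(leaf_asn(1), leaf_asn(2))                # 64701 64702
print(len(PRIVATE_2BYTE), len(PRIVATE_4BYTE))  # 1023 94967295
```

The last line confirms the counts quoted above: 1,023 private 2-byte AS numbers versus about 95 million private 4-byte AS numbers.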

2. BGP Basic Parameter Configuration

Fast fault convergence is crucial in a data centre, so a BGP keepalive timer of 1 second and a hold timer of 3 seconds are recommended. Another important BGP timer is the advertisement interval, which sets the minimum interval between route advertisements sent to a neighbour. For eBGP neighbours this interval commonly defaults to 30 seconds. In a data centre, however, changes must be announced immediately, so an advertisement interval of 0 seconds is recommended.

For Ruijie RGOS software, the BGP timer settings need to be configured within the BGP process.

| Configuration Commands | Notes |
|---|---|
| `timers bgp 1 3` | Sets the BGP keepalive/hold time to 1 s/3 s |
| `neighbor XX advertisement-interval 0` | Sets the interval for sending route advertisements to 0 seconds |
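
Under RFC 4271, each side proposes a Hold Time in its OPEN message and the session uses the smaller value, so configuring 3 seconds locally bounds failure detection at 3 seconds unless the peer proposes 0, which disables the hold timer. The Python sketch below illustrates this negotiation. Capping the keepalive at one third of the negotiated hold time is an assumption (RFC 4271 only suggests one third as a reasonable maximum), and the peer's 9-second proposal is hypothetical.

```python
def negotiate_hold_time(local_hold: int, peer_hold: int) -> int:
    """Return the session Hold Time: the smaller of the two proposals (RFC 4271)."""
    for hold in (local_hold, peer_hold):
        # RFC 4271: a Hold Time must be 0 (keepalives disabled) or at least 3 seconds.
        if hold < 0 or hold in (1, 2):
            raise ValueError(f"unacceptable hold time: {hold}")
    return min(local_hold, peer_hold)


def effective_keepalive(configured_keepalive: int, hold_time: int) -> int:
    """Assumed policy: cap the keepalive at one third of the negotiated Hold Time."""
    if hold_time == 0:
        return 0  # a Hold Time of 0 means no keepalives are sent
    return min(configured_keepalive, hold_time // 3)


# Local side uses "timers bgp 1 3"; a hypothetical peer proposes a 9 s hold time.
hold = negotiate_hold_time(3, 9)
keepalive = effective_keepalive(1, hold)
print(f"hold={hold}s keepalive={keepalive}s")  # hold=3s keepalive=1s
```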

Other recommended configurations:

3. BGP ECMP

BGP can install equal-cost routes once the "multipath" feature is enabled. In Ruijie RGOS, for example, configure the following:

| Configuration Commands | Notes |
|---|---|
| `maximum-paths ebgp 32` | Sets the maximum number of BGP equal-cost routes to 32 (recommended on TOR); 64 is recommended on Leaf |

The command above only enables BGP multipath. BGP route selection rules then determine which next hops across multiple links are added to the routing table as ECMP (Equal-Cost Multi-Path) routes. Two routes are considered equal, and traffic is load-balanced across them, only if they tie on the first eight steps of the BGP best-path selection process. In data centre BGP planning, only AS_PATH needs attention among these conditions; the others are either identical across the IDC or irrelevant.

By default, AS_PATH is compared strictly: an equal-cost path can be formed only when both the AS_PATH length and the individual AS numbers are identical. Under the AS number plan above, each TOR has a different AS number, so the routes a Leaf learns southbound from the two TORs in the same group cannot be load-balanced. To resolve this, enable relaxed AS_PATH comparison on the Leaf. With Ruijie RGOS, for example, configure:

| Configuration Commands | Notes |
|---|---|
| `bgp bestpath as-path multipath-relax` | Compares only the AS_PATH length instead of the specific AS numbers in the AS_PATH |
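
The difference between strict and relaxed AS_PATH comparison can be sketched in Python. This is a simplified model, not RGOS behaviour: it assumes the paths are already sorted so that `paths[0]` is the best path and that every other best-path attribute already ties. `Path`, `multipath_set`, and the next-hop addresses are hypothetical, and `max_paths` plays the role of the maximum-paths setting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    next_hop: str
    as_path: tuple[int, ...]


def multipath_set(paths: list[Path], relax: bool, max_paths: int) -> list[Path]:
    """Return the ECMP set for one prefix; only AS_PATH decides membership here."""
    best = paths[0]

    def ties_with_best(p: Path) -> bool:
        if relax:  # multipath-relax: compare AS_PATH length only
            return len(p.as_path) == len(best.as_path)
        return p.as_path == best.as_path  # default: AS_PATH must match exactly

    return [p for p in paths if ties_with_best(p)][:max_paths]


# On a Leaf: the same server prefix learned from two TORs (AS 65001 and 65002).
tor_paths = [Path("10.0.0.1", (65001,)), Path("10.0.0.3", (65002,))]
print(len(multipath_set(tor_paths, relax=False, max_paths=64)))  # 1
print(len(multipath_set(tor_paths, relax=True, max_paths=64)))   # 2

# On a TOR: the same prefix learned from two Leafs in POD 1 (both AS 64701).
leaf_paths = [Path("10.1.0.1", (64701,)), Path("10.1.0.3", (64701,))]
print(len(multipath_set(leaf_paths, relax=False, max_paths=32)))  # 2
```

The TOR case shows why strict comparison is already sufficient there: every Leaf in a POD shares one AS number, so the AS_PATHs match exactly.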

As noted in the AS plan above, all Leaf devices in the same POD share one AS number. Whichever Leaf sends a route, the AS_PATH seen on the TOR is therefore the same, so there is no need to enable relaxed comparison on the TOR devices.

Additionally, there are many equal-cost neighbours between the Leaf and TOR with completely consistent configuration policies. It is recommended to use the BGP peer-group function to simplify configuration in actual deployment.

To implement this on Ruijie RGOS, configure the following:

| Configuration Commands | Notes |
|---|---|
| `neighbor abc peer-group` | Creates peer group abc |
| `neighbor <neighbor-IP> peer-group abc` | Adds a neighbour to peer group abc |

4. BGP Route Attribute Planning

5. Establish routing rules

As shown in Figure 4, multiple groups of TOR + Leaf form a POD (Point of Delivery, the basic physical design unit of a data centre). The Spine layer interconnects PODs horizontally, while the MAN/DCI provides cross-regional interconnection. The recommended BGP routing plan for the IDC is as follows:

The TOR layer increasingly uses de-stacking technology to provide server dual-homing (refer to the Technology Feast: “How to De-Stack Data centre Network Architecture”). In the de-stacking scenario, the Leaf receives a large number of host routes from the TOR switches (depending on the number of hosts in the POD, possibly tens of thousands). If the Leaf propagates these host routes to every TOR, the routing capacity of the TOR switches may be exceeded. Therefore, an inbound policy should be applied on each TOR to filter out host routes advertised by other TORs.
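The inbound filtering rule can be modelled in Python as below. This is an illustrative sketch, not an RGOS route-map: it assumes every host route (/32 for IPv4, /128 for IPv6) a TOR receives from its Leaf was originated by another TOR, and that the TOR can still reach those hosts through the subnet or default routes it keeps. The function names and prefixes are hypothetical.

```python
import ipaddress


def is_host_route(prefix: str) -> bool:
    """True for /32 (IPv4) or /128 (IPv6) prefixes."""
    net = ipaddress.ip_network(prefix)
    return net.prefixlen == net.max_prefixlen


def tor_inbound_filter(received_from_leaf: list[str]) -> list[str]:
    """Hypothetical TOR inbound policy: drop host routes, keep subnet and default routes."""
    return [p for p in received_from_leaf if not is_host_route(p)]


received = ["10.1.1.10/32", "10.1.0.0/16", "0.0.0.0/0", "2001:db8::10/128", "2001:db8::/48"]
print(tor_inbound_filter(received))
# ['10.1.0.0/16', '0.0.0.0/0', '2001:db8::/48']
```

Filtering on the receiving side of the TOR leaves the Leaf's full host table intact, so the Leaf can still forward precisely to the TOR pair that serves each host.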

6. BGP Dual Stack Planning

BGP is multiprotocol (Multiprotocol Extensions, RFC 4760) and can carry IPv4 and IPv6 routes in the same BGP process. The common practice is to establish separate BGP sessions for IPv4 and IPv6 neighbours, but this doubles the configuration and maintenance workload. In fact, IPv4 routes can be advertised in UPDATE messages over a TCP session established between IPv6 addresses, and vice versa; that is, a single session can carry routes for multiple address families.

As shown in Figure 5, Ruijie Networks provides an optimized solution in which only a single session is established to carry dual-stack routes. This simplifies configuration, saves IP addresses, and halves the resource cost of protocols such as BFD for BGP, because each neighbour needs only one session instead of two.
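A single session can carry both families because each peer lists the address families it supports as Multiprotocol Extensions capabilities (RFC 4760) inside the Capabilities optional parameter of its OPEN message (RFC 5492). The Python sketch below encodes that parameter for IPv4 unicast and IPv6 unicast and intersects two peers' family lists. It covers only this encoding; it is not a BGP implementation and does not describe other details of Ruijie's solution, such as next-hop handling for IPv4 routes carried over an IPv6 session. The helper names are hypothetical.

```python
import struct

AFI_IPV4, AFI_IPV6 = 1, 2   # IANA Address Family Identifiers
SAFI_UNICAST = 1
CAP_MULTIPROTOCOL = 1       # Multiprotocol Extensions capability code (RFC 4760)
OPT_PARAM_CAPABILITIES = 2  # OPEN optional parameter type (RFC 5492)


def mp_capability(afi: int, safi: int) -> bytes:
    # code (1 byte) | length = 4 (1 byte) | AFI (2 bytes) | reserved (1 byte) | SAFI (1 byte)
    return struct.pack("!BBHBB", CAP_MULTIPROTOCOL, 4, afi, 0, safi)


def capabilities_param(families: list[tuple[int, int]]) -> bytes:
    body = b"".join(mp_capability(afi, safi) for afi, safi in families)
    return struct.pack("!BB", OPT_PARAM_CAPABILITIES, len(body)) + body


def negotiated(local: list[tuple[int, int]], peer: list[tuple[int, int]]) -> list[tuple[int, int]]:
    # A family is exchanged on the session only if both peers advertised it.
    return sorted(set(local) & set(peer))


dual_stack = [(AFI_IPV4, SAFI_UNICAST), (AFI_IPV6, SAFI_UNICAST)]
param = capabilities_param(dual_stack)
print(param.hex())                                          # 020c010400010001010400020001
print(negotiated(dual_stack, dual_stack))                   # [(1, 1), (2, 1)]
print(negotiated(dual_stack, [(AFI_IPV4, SAFI_UNICAST)]))   # [(1, 1)]
```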

Planning BGP Operation and Maintenance Capability

1. Use BFD technology to accelerate BGP network convergence

BFD provides millisecond-level failure detection. Linked with BGP, it enables rapid convergence of BGP routes and helps ensure business continuity. Enabling BFD for BGP in the IDC is recommended. Considering device performance, a 300 ms × 3 configuration (a detection time of 900 ms) is advised when BFD is enabled on all ports.

Taking Ruijie RGOS software as an example, the main configuration of BFD is as follows:

| Configuration Commands | Notes |
|---|---|
| `neighbor XX fall-over bfd` | Enables BFD for the BGP neighbour |
| `bfd interval 300 min_rx 300 multiplier 3` | 300 ms transmit and receive intervals; the session is declared down after 3 consecutive packets are missed |
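
The 900 ms figure follows from the asynchronous-mode Detection Time defined in RFC 5880 (section 6.8.4): the remote Detect Mult multiplied by the larger of the local Required Min RX Interval and the remote Desired Min TX Interval. The Python sketch below works through this arithmetic; the function name and the 500 ms peer in the second example are hypothetical.

```python
def bfd_detection_time_ms(local_required_min_rx: int,
                          remote_desired_min_tx: int,
                          remote_detect_mult: int) -> int:
    """Asynchronous-mode Detection Time per RFC 5880, section 6.8.4."""
    # The remote side transmits no faster than the local side is willing to receive.
    agreed_remote_tx = max(local_required_min_rx, remote_desired_min_tx)
    return remote_detect_mult * agreed_remote_tx


# Both ends configured with 300 ms intervals and multiplier 3.
print(bfd_detection_time_ms(300, 300, 3))  # 900
# A hypothetical peer that can only transmit every 500 ms stretches detection.
print(bfd_detection_time_ms(300, 500, 3))  # 1500
```

Even in the slower case, detection is well below the 3-second BGP hold timer, which is why BFD rather than the hold timer drives convergence.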

2. Uninterrupted Service Capability - Fast Switching of BGP

3. Uninterrupted Service Capability - BGP NSR

NSR (Non-Stop Routing) keeps routing uninterrupted when the switch's management board fails over between active and standby and the routing protocols restart. When NSR is enabled, TCP NSS (Non-Stop Service) is activated to back up neighbour and routing information to the standby board. During an active/standby switchover of the management board, NSR keeps the network topology stable, maintains neighbour states and the forwarding table, and ensures that key services are not interrupted.

4. Uninterrupted Service Capability - Smooth Exit and Delayed Release of BGP

Then, wait for a set period to ensure that route learning is complete before disconnecting the BGP session with the neighbouring device.

● BGP delayed release: When a device restarts, it may advertise routes to its neighbours before those routes have been programmed into the local hardware forwarding table, attracting traffic prematurely and causing forwarding failures. To avoid this, configure BGP to advertise its routes with the lowest priority for a period after the whole device restarts. It is recommended to pre-configure this capability on the device. With Ruijie RGOS, for example, configure:

| Configuration Commands | Notes |
|---|---|
| BGP advertises the lowest priority on startup: 120 | Routes are advertised with the lowest priority for 120 seconds after startup; the duration is configurable |

Summary

Upcoming Technology Feast articles will cover BGP optimization and advanced operation and maintenance features in more depth, with practical strategies for improving network efficiency and reliability. We welcome your participation in discussing best practices and new solutions with the community.

Related Blogs:

Exploration of Data Center Automated Operation and Maintenance Technology: Zero Configuration of Switches

Technology Feast | How to De-Stack Data Center Network Architecture

Technology Feast | A Brief Discussion on 100G Optical Modules in Data Centers

Research on the Application of Equal Cost Multi-Path (ECMP) Technology in Data Center Networks

Technology Feast | How to build a lossless network for RDMA

Technology Feast | Distributed VXLAN Implementation Solution Based on EVPN

Exploration of Data Center Automated Operation and Maintenance Technology: NETCONF

Technical Feast | A Brief Analysis of MMU Waterline Settings in RDMA Network

Technology Feast | Internet Data Center Network 25G Network Architecture Design

Technology Feast | The "Giant Sword" of Data Center Network Operation and Maintenance

Technology Feast: Routing Protocol Selection for Large Data Centre Networks
